Trust in Multi-Party Human-Robot Interaction

In this project, we designed and evaluated a novel framework for robot mediation of a support group. We conducted a user study with a NAO robot mediator controlled by a human operator who was hidden from the participants (Wizard-of-Oz). At the end of each session, the participants were asked to annotate their trust toward the other participants while reviewing the session recordings. In a paper at the International Conference on Robotics and Automation (ICRA), on which I was the second author, we showed that a robot mediator could significantly increase the average interpersonal trust after the group interaction session.

The following project description is taken from the Interaction Lab website.

Within the field of Human-Robot Interaction (HRI), a growing subfield is forming that focuses specifically on interactions between one or more robots and multiple people, known as Multi-Party Human-Robot Interaction (MP-HRI). MP-HRI encompasses the challenges of single-user HRI (interaction dynamics, human perception, etc.) and extends them to the challenges of multi-party interactions (within-group turn taking, dyadic dynamics, and group dynamics).

To address these challenges, MP-HRI requires new methods and approaches. Effective MP-HRI enables robotic systems to function in many contexts, including service, support, and mediation. In realistic human contexts, service and support robots need to work with varying numbers of individuals, particularly within team structures. In mediation, robotic systems must, by definition, be able to work with multiple parties. These contexts often overlap, and algorithms that work in one context can benefit work in another.

This project will advance basic research on trust and influence in MP-HRI contexts. This will involve exploring how robots and people establish, maintain, and repair trust in MP-HRI. Specifically, this research will examine robot group mediation for group counseling, with extensions to team performance in robot service and support teams.

Study Design

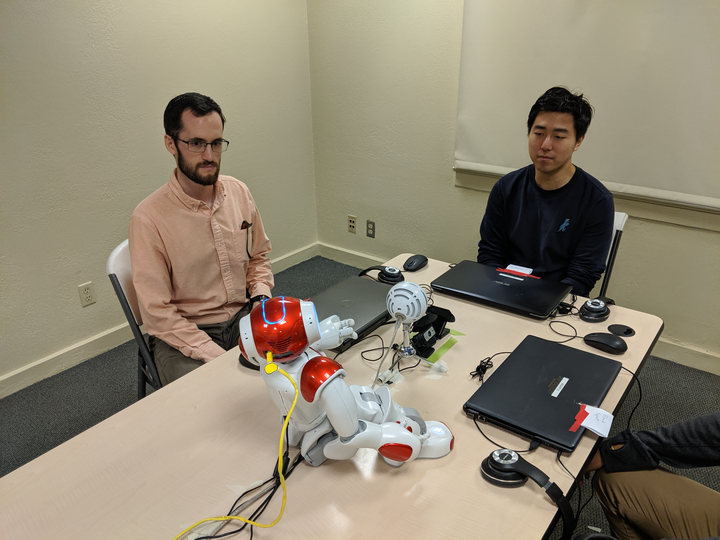

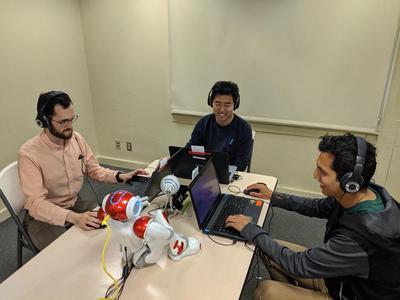

In each study session, three participants were seated around one end of a table with a seated NAO robot, as shown in Figure 1. The NAO robot, acting as the group moderator, faced the participants. On the table were a 360-degree microphone and three cameras pointed directly at the participants' faces. Behind the robot, an RGB-D camera mounted on a tripod recorded the interactions between the group members. The robot operator was seated behind a one-way mirror, hidden from the participants.

| Sensitivity | Question | Disclosure |
|---|---|---|
| Low | What do you like about school? | When I feel stressed, I think my circuits might overload. Does anyone else feel the same way? |
| Medium | What are some of the hardest parts of school for you? | Sometimes I worry I am inadequate for this school. Does anyone else sometimes feel that too? |
| High | What will happen if you don’t succeed in school? | Sometimes I worry about if I belong here. Does anyone else feel the same way? |

During the interaction, the robot could ask questions or make disclosures. A total of 16 questions and 6 disclosures were available. On average, the robot asked 12 questions and made 3 disclosures per session.

The questions and disclosures are grouped into low, medium, and high sensitivity levels, as illustrated in the table above.
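The grouping by sensitivity can be pictured as a small prompt store that the wizard's interface draws from. The sketch below is hypothetical: the `Prompt`, `PromptBank`, `Sensitivity`, and `Kind` names are illustrative rather than taken from the project code, and the bank is seeded only with the six example prompts from the table, not the full set of 16 questions and 6 disclosures.

```python
from dataclasses import dataclass, field
from enum import Enum


class Sensitivity(Enum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


class Kind(Enum):
    QUESTION = "question"
    DISCLOSURE = "disclosure"


@dataclass(frozen=True)  # frozen makes prompts hashable, so they can be stored in a set
class Prompt:
    kind: Kind
    sensitivity: Sensitivity
    text: str


@dataclass
class PromptBank:
    """Hypothetical prompt store that tracks which prompts were used in the current session."""
    prompts: list[Prompt]
    used: set[Prompt] = field(default_factory=set)

    def available(self, kind, sensitivity):
        """Prompts of the given kind and sensitivity not yet used this session."""
        return [
            p for p in self.prompts
            if p.kind is kind and p.sensitivity is sensitivity and p not in self.used
        ]

    def mark_used(self, prompt):
        self.used.add(prompt)

    def counts(self):
        """Number of used prompts of each kind (questions vs. disclosures)."""
        return {kind: sum(1 for p in self.used if p.kind is kind) for kind in Kind}


# Seeded with the example prompts from the table only.
bank = PromptBank([
    Prompt(Kind.QUESTION, Sensitivity.LOW, "What do you like about school?"),
    Prompt(Kind.QUESTION, Sensitivity.MEDIUM, "What are some of the hardest parts of school for you?"),
    Prompt(Kind.QUESTION, Sensitivity.HIGH, "What will happen if you don’t succeed in school?"),
    Prompt(Kind.DISCLOSURE, Sensitivity.LOW,
           "When I feel stressed, I think my circuits might overload. Does anyone else feel the same way?"),
    Prompt(Kind.DISCLOSURE, Sensitivity.MEDIUM,
           "Sometimes I worry I am inadequate for this school. Does anyone else sometimes feel that too?"),
    Prompt(Kind.DISCLOSURE, Sensitivity.HIGH,
           "Sometimes I worry about if I belong here. Does anyone else feel the same way?"),
])
```

Calling `mark_used` after each prompt keeps the wizard from repeating itself, and `counts()` at the end of a session yields per-session tallies of questions and disclosures.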

To measure how trust changes over time, after the group interaction the participants were asked to rate their trust toward each of the other participants while reviewing the recording of their session.
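Given such time-stamped ratings, one way to compute the average interpersonal trust at any moment is to hold each rater's most recent rating of each target and average over all ordered (rater, target) pairs. The sketch below assumes a hypothetical annotation format (a dict from `(rater, target)` to time-sorted `(seconds, rating)` tuples) and a numeric rating scale; the annotation website's actual format and scale are not described here.

```python
from bisect import bisect_right
from statistics import mean


def rating_at(series, t):
    """Most recent rating at or before time t (ratings are held until changed).

    `series` is a time-sorted list of (seconds, rating) tuples. If t precedes
    every annotation, the first rating is returned.
    """
    times = [time for time, _ in series]
    idx = bisect_right(times, t)
    return series[max(idx - 1, 0)][1]


def average_trust(annotations, t):
    """Mean trust over all ordered (rater, target) pairs at time t."""
    return mean(rating_at(series, t) for series in annotations.values())


def trust_change(annotations, start, end):
    """Difference in average interpersonal trust between two time points."""
    return average_trust(annotations, end) - average_trust(annotations, start)
```

Holding the last rating treats annotations as a step function, which matches raters adjusting a value only when their impression changes; interpolating between ratings would be an alternative design.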

Details of the study procedure can be found here.

Development Details

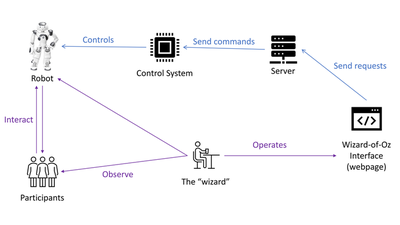

As shown in Figure 3, the wizard controls the robot through the web-based Wizard-of-Oz interface.
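One common way to structure such a controller is for the browser to send small JSON commands that a server-side dispatcher translates into robot actions. The sketch below illustrates only that dispatch step and is hypothetical: the message fields (`action`, `text`, `name`) and the `RecordingRobot` stand-in are assumptions, and a real backend would replace the stand-in's methods with calls into the NAO's own SDK (NAOqi).

```python
import json


class RecordingRobot:
    """Hypothetical stand-in for the robot backend: records actions instead of driving a NAO."""

    def __init__(self):
        self.log = []

    def say(self, text):
        self.log.append(("say", text))

    def gesture(self, name):
        self.log.append(("gesture", name))


def dispatch(robot, raw_message):
    """Parse one JSON command from the wizard's browser and invoke the matching robot action."""
    msg = json.loads(raw_message)
    handlers = {
        "say": lambda: robot.say(msg["text"]),
        "gesture": lambda: robot.gesture(msg["name"]),
    }
    action = msg.get("action")
    if action not in handlers:
        raise ValueError(f"unknown action: {action!r}")
    handlers[action]()
    return {"status": "ok", "action": action}
```

Keeping the dispatcher separate from the HTTP or WebSocket transport lets it be tested without a robot, and the returned status can be echoed back to the wizard's page as an acknowledgement.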

For project development, my contributions include:

- Developed the NAO control program

- Designed and implemented the web-based Wizard-of-Oz controller

- Designed and implemented the self-annotation website

- Developed the data collection program for one depth camera, three webcams, and one 360-degree microphone

- Post-processed the data for whitening and fast data loading

- Implemented turn-taking prediction with Temporal Convolutional Networks (TCN) and LSTMs for multi-modal input
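For the post-processing step, the simplest form of whitening is per-channel standardization: subtract each feature's mean and divide by its standard deviation, with statistics computed on the training split only. The sketch below shows this diagonal case in plain Python; full PCA or ZCA whitening would additionally decorrelate channels using the covariance matrix. The frame representation (a list of equal-length feature lists) is an assumption, since the project's feature layout and storage format are not described here.

```python
from statistics import fmean, pstdev


def fit_standardizer(frames):
    """Per-channel mean and standard deviation over a list of equal-length feature frames."""
    channels = list(zip(*frames))  # transpose: one tuple per feature channel
    means = [fmean(c) for c in channels]
    # A constant channel has zero spread; use 1.0 so it maps to zeros instead of dividing by zero.
    stds = [pstdev(c) or 1.0 for c in channels]
    return means, stds


def apply_standardizer(frames, means, stds):
    """Shift each channel to zero mean and scale it to unit variance."""
    return [[(v - m) / s for v, m, s in zip(frame, means, stds)] for frame in frames]
```

Fitting on the training sessions and reusing the same `means` and `stds` for validation and test sessions avoids leaking held-out statistics into the model.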
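A TCN is built from causal, dilated 1-D convolutions: the output at time t depends only on inputs at t and earlier, and doubling the dilation at each level grows the receptive field exponentially, which suits online turn-taking prediction. The sketch below shows only that core operation on a single channel in plain Python. It illustrates the building block, not the project's model, and omits the nonlinearities, residual connections, multiple channels, and multi-modal fusion a real TCN would use.

```python
def causal_dilated_conv1d(x, weights, dilation=1):
    """y[t] = sum_i weights[i] * x[t - i * dilation], treating inputs before t = 0 as zero.

    Each output depends only on the current and past inputs (causal).
    """
    y = []
    for t in range(len(x)):
        acc = 0.0
        for i, w in enumerate(weights):
            src = t - i * dilation
            if src >= 0:
                acc += w * x[src]
        y.append(acc)
    return y


def tcn_stack(x, weights, dilations):
    """Apply one causal dilated convolution per level (linear, for illustration only)."""
    for d in dilations:
        x = causal_dilated_conv1d(x, weights, d)
    return x


def receptive_field(kernel_size, dilations):
    """Number of past time steps (including t) that can influence the output at t."""
    return 1 + (kernel_size - 1) * sum(dilations)
```

With kernel size 2 and dilations 1, 2, 4, an impulse at t = 0 influences exactly the first 8 outputs, matching `receptive_field(2, [1, 2, 4])`. An LSTM reaches arbitrarily far back through its recurrent state instead, at the cost of sequential computation.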